Identity Protocol Gut Check

Protocol gut check. That's how someone recently described some research I've got under way for a report we're calling the "TechRadar™ for Security Pros: Zero Trust Identity Standards," wherein we'll assess the business value-add of more than a dozen identity-related standards and open protocols. But it's also a great name for an episode of angst that recently hit the IAM blogging world, beginning with Eran Hammer's public declaration that OAuth 2.0 — for which he served as a spec editor — is "bad."

As you might imagine, our TechRadar examination will include OAuth; I take a lot of inquiries and briefings in which it figures prominently, and I've been bullish on it for a long time. In this post, I'd like to share some thoughts on this episode with respect to OAuth 2.0's value to security and risk pros. As always, if you have further thoughts, please share them with me in the comments or on Twitter.

"Consumer versus enterprise" is less meaningful now. Eran opines that "At the core of the problem [of OAuth 2.0's design versus 1.0] is the strong and unbridgeable conflict between the web and enterprise worlds." I see just the opposite. We're headed to the identity singularity, in which intra-enterprise, B2B, B2C, SaaS, mobile, you-name-it use cases all start melding, and identity and access context must flow readily between them. Where Eran is pessimistic, I'm optimistic: OAuth's directions reflect the web world's ability to solve distributed computing problems in a way that the enterprise world has struggled with. If enterprise players don't come to the table, how can we hope for better than we've had in that world? As Tim Bray eloquently (ahem) points out, "Google offers a buttload of APIs. They are used by a frightening number of people (and robots) coming from a frightening variety of software platforms. Some of them involve the interchange of large amounts of real-money value. For a large and increasing proportion, if you want to get your app authorized for the API, it’s OAuth 2 or nothing." The API economy means it's not a web/enterprise celebrity deathmatch anymore.

Standards-making has evolved… When only formal standards development organizations did such work, people could sometimes forget that standards must compete in a landscape that offers alternatives, just like any other technology, product, or solution component. In the last decade, various dot-orgs and informal groups clashed more and more often by proposing directly competing application-layer standards. I see signs that we've now entered a new phase, where pressure from prospective end-users (the market!) forces these groups to consolidate and merge faster, more completely, and more usefully. This is what the people at the OAuth table (and the SCIM table, and the OpenID Connect/OpenID Artifact Binding table…) have done, and it generally helps tamp down the FUD (fear, uncertainty, and doubt) factor.

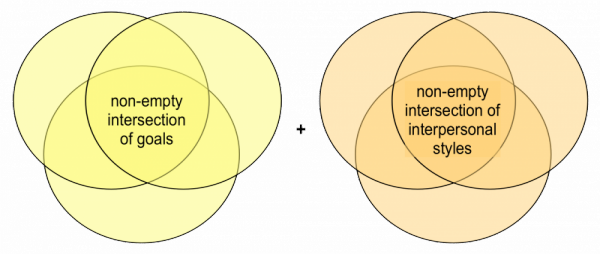

…but people remain the same. Successful standards work will always require these elements from its contributors:

What I believe went awry in this episode was primarily a mismatch of goals (well, interpersonal styles too). Eran has freely admitted that he was not participating for any personal or corporate interest in the result; most recently he said, "I stuck around as long as I could stand it, to fight for what I thought was best for the web. I had nothing personally to gain from the decisions being made." (It should be noted that at the IETF, when you have a spec editor's hat on, your job is to capture group consensus.) Not having skin in the game, whether an implementation, a product, or a keen shared need to solve a problem not already solved by OAuth 1.0, Eran as an individual contributor was free to advocate for solutions that were academically more "pure" but less connected to commercial and ecosystem reality. This made the work extra angst-ridden and drawn out, especially unfortunate in a cloud and mobile era that prizes agility. But the flood of product announcements around OAuth continues apace, and I'm seeing adoption and interest by IT end-users grow; maybe the delay just gave enterprises some breathing room. And now OAuth 2.0 is complete and about to be published as an IETF RFC (that is, a real live standard).

Being generative can add value. By generative, I mean "enabling unexpected reuse." The distributed computing world has seen a lot of supposedly modular and composable solutions come and go. Eran registered a complaint about OAuth starting as a protocol and ending as a framework, with all the complexity and interop challenges that this entails. But in record time, OAuth 2.0 has already proven to be valuable as a substrate for new solutions. I've talked before about OpenID Connect and UMA (N.B.: I chair the latter group) and the way they leverage OAuth. But developers and architects are also constantly specifying and experimenting with "OAuth.next" features, such as active token revocation and chained delegation, enabling rolling improvements with a realistic blend of "traction" and "sanction" (that is, market adoption and formal approval). This no doubt makes for a potentially more confusing and complex standards environment, but also a more vibrant one in which bad ideas fail faster.

A final personal note… In Eran's blog sequel, he made a kind mention of me and a somewhat dubious mention of UMA. I have a great deal of respect for Eran's sheer protocol-defining talent; he and Scott Cantor of SAML fame are peas in a spec-writing pod. A couple of years ago, the UMA group sought Eran's feedback, and his feedback was that UMA was too complex. 🙂 We subsequently worked hard not only to excise the complexity, but to leverage OAuth in plain-vanilla fashion. So while he may still be skeptical about whether UMA scratches the "user-managed access" itch, I hope he'll take the opportunity to reassess the modern version.