Voice Assistants Cannot Answer All Your Questions

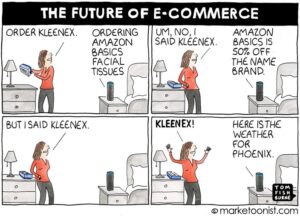

Let’s be honest: There is a lot of hype around voice assistants (or, as we call them at Forrester, intelligent agents, or IAs). Marketers, agencies, and vendors alike are excited about the potential future of voice search. But have you ever had a voice search experience with an IA like the one in the cartoon below?

We wanted to conduct rigorous research to understand just how “intelligent” these intelligent agents are when it comes to answering commercial voice searches. We decided to:

- Come up with 180 commercial questions . . . We specifically wanted to ask the IAs questions that were commercially applicable, such as “What’s the best waterproof mascara?” or “Where can I get advice on my 401(k)?”

- . . . Across six industries . . . We chose questions within industries that are most relevant to Forrester’s client base: consumer packaged goods and retail, travel and hospitality, financial services and insurance, tech and telecom, healthcare, and automotive.

- . . . And ask our questions to each of the four major IAs. We tested the four most popular IAs to see how they “answered” our voice search questions: Amazon Alexa, Apple’s Siri, Google Assistant, and Microsoft’s Cortana.

What did we learn? Across all four intelligent agents, only a disappointing 35% of our voice search questions were answered. To pass, an IA had to either answer the question sufficiently or deliver contextual, relevant, and helpful information beyond what the user expected.

So unfortunately, many of our voice searches went something like this:

“Alexa, what are the best types of dishwasher detergent?”

“Sorry, I don’t know that one.”

“Hey Google, what brands sell the softest men’s sweaters?”

“Sorry, I can’t help with that yet. I’m still learning.”

Intelligent agents’ lack of commercial applicability is highlighted by their:

- Failure to provide direct answers. Instead of providing a quick response, most of the time the IA made us go find the answer. For about one-third of our questions, we were redirected to “something on the web” for more information.

- Inability to understand context or conversation. The IAs rely heavily on Google or Bing search results for answers. But we found that when we added context or location to our questions, or if we had follow-up questions, the IAs were mostly unable to answer sufficiently.

- Bad user experience. As humans, we engage in conversations all the time, so we are naturally hyper-aware when the responses to our questions come too fast or run too long. At times, Alexa spoke without the natural cadence of human speech, and Cortana rambled on for more than 30 seconds at a time.

The full findings from our research were published today (available to Forrester clients).

We’re closely tracking the voice search space and its implications for future marketing mix strategies. What do you think? Set up an inquiry if you want to learn more; I look forward to hearing about your experiences with voice search!