Why Chatbots Can’t Read Your Mind

Chatbots are cropping up everywhere, from customer service to internal help desks, but what makes them tick?

Most of us meet chatbots from the end-user side: a chat window opens, we type a question, and the chatbot may or may not figure out what we’re saying.

Why is this?

Chatbots break down into two parts: the “chat” and the “bot.” The chat component is responsible for figuring out what the user is saying, and the bot component is responsible for taking action on (executing) the request. In this post, we’re focusing on the chat aspect; we’ll cover the bot aspect in a future post.
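To make the split concrete, here is a minimal Python sketch assuming a hypothetical design in which the chat side turns raw text into a structured request and the bot side acts only on that structure. The names `ParsedRequest`, `chat_understand`, `bot_execute`, and `handle` are illustrative, not any product’s API, and the chat logic is a deliberately naive placeholder.

```python
from dataclasses import dataclass, field


@dataclass
class ParsedRequest:
    """Structured output of the chat side (hypothetical shape)."""
    intent: str                                   # what the user is asking for
    entities: dict = field(default_factory=dict)  # details pulled from the text


def chat_understand(text: str) -> ParsedRequest:
    """Chat side: figure out what the user is saying.

    Placeholder logic only; real systems use language models,
    intent processing, and entity identification.
    """
    if "dinner" in text.lower():
        return ParsedRequest(intent="find_meal", entities={"meal": "dinner"})
    return ParsedRequest(intent="unknown")


def bot_execute(request: ParsedRequest) -> str:
    """Bot side: act on the structured request (stubbed here)."""
    if request.intent == "find_meal":
        return f"Looking up places for {request.entities['meal']}..."
    return "Sorry, I didn't understand that."


def handle(text: str) -> str:
    # The bot never sees raw text, only what the chat side understood.
    return bot_execute(chat_understand(text))
```

Keeping the two halves behind a structured interface means a failure to understand (chat) can be diagnosed separately from a failure to act (bot).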

To make a good chatbot, you need a good chat capability. Put simply, in the context of chatbots, natural language processing (NLP) and natural language understanding (NLU) are machine-learning techniques for analyzing language; they combine three elements to process conversations, text, and other language-based data.[i]

NLP is responsible for identifying and breaking down the words and entities in a given sentence; in effect, it helps improve machine reading comprehension and enables more advanced intent processing.

Broadly, chat breaks down into three core elements:

- Language models

- Intent processing

- Entity identification

Here’s a simplified explanation of these components:

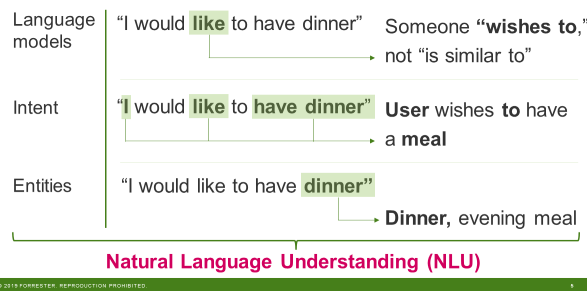

Language models, in the context of chatbots, usually refer to more than just a statistical language model. They’re also responsible for normalization, word identification, part-of-speech tagging, and mapping identified words to common entities (see below). In effect, they tell the machine what the sentence is made of. If someone says, “I would like to have dinner,” language models also help identify that “like” in this instance means “wishes to” rather than “is similar to.”
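Real language models are statistical and far richer, but the steps named above can be sketched with a few hand-written rules. This Python sketch assumes a hypothetical shorthand table (`NORMALIZE`) and a single toy rule for disambiguating “like”; it only illustrates normalization, tokenization, and part-of-speech disambiguation, not how any particular model does them.

```python
import re

# Hypothetical normalization table: shorthand -> canonical form.
NORMALIZE = {"nyc": "new york city", "pls": "please", "i'd": "i would"}


def normalize(text: str) -> list[str]:
    """Lowercase, split into word tokens, and expand known shorthand."""
    tokens = re.findall(r"[a-z']+", text.lower())
    expanded: list[str] = []
    for tok in tokens:
        expanded.extend(NORMALIZE.get(tok, tok).split())
    return expanded


def tag_like(tokens: list[str]) -> list[tuple[str, str]]:
    """Toy part-of-speech rule for 'like'.

    After 'would', treat 'like' as a verb meaning 'want';
    otherwise treat it as a preposition meaning 'similar to'.
    Every other token gets a placeholder tag.
    """
    tagged = []
    for i, tok in enumerate(tokens):
        if tok == "like":
            after_would = i > 0 and tokens[i - 1] == "would"
            tagged.append((tok, "VERB:want" if after_would else "PREP:similar_to"))
        else:
            tagged.append((tok, "WORD"))
    return tagged
```

For example, `normalize("I'd like to have dinner")` expands “I’d” to “i would”, so `tag_like` reads “like” as a verb, while in “It looks like rain” it falls back to the “similar to” sense.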

Intent processing figures out what a user is specifically asking for. In the dinner example, it determines that the user wishes to have a meal and links that request to relevant information.
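Production intent engines use trained classifiers, but the core idea (match an utterance against known examples, and refuse to guess below some confidence) can be sketched with simple word overlap. The intents, example utterances, and the 0.3 threshold below are hypothetical choices for illustration, and Jaccard similarity stands in for a real model.

```python
import re
from typing import Optional

# Hypothetical training examples: intent name -> sample utterances.
TRAINING = {
    "order_meal": ["i would like to have dinner", "i want to eat lunch",
                   "book a table for dinner"],
    "reset_password": ["reset my password", "i forgot my password"],
}


def words(text: str) -> set[str]:
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z']+", text.lower()))


def classify(text: str, threshold: float = 0.3) -> tuple[Optional[str], float]:
    """Return (best intent, score) using word overlap (Jaccard similarity).

    If no example clears the threshold, return (None, score) so the
    chatbot can ask the user to rephrase instead of guessing.
    """
    query = words(text)
    best_intent: Optional[str] = None
    best_score = 0.0
    for intent, examples in TRAINING.items():
        for example in examples:
            ex = words(example)
            union = query | ex
            score = len(query & ex) / len(union) if union else 0.0
            if score > best_score:
                best_intent, best_score = intent, score
    if best_score < threshold:
        return None, best_score
    return best_intent, best_score
```

Returning `None` rather than the closest match is a design choice: it lets the chatbot ask for clarification, which is usually better than confidently doing the wrong thing.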

Entity identification relies on entities: defined, logical concepts that can be represented in a model independently of any particular conversation. Entities may have specific connections to one another, as in the sentence “dinner is an evening meal.” Some entities are part of the language model itself (for example, recognizing “NYC” as New York City, a city in the US state of New York).[ii]
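Entities can be modeled as small records holding the surface forms users might type and the relations that connect them to other concepts. This Python sketch assumes a hypothetical, hand-built catalog echoing the examples above (NYC, dinner); the `Entity` fields and `find_entities` helper are illustrative, and real systems typically learn or import far larger catalogs.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Entity:
    name: str
    kind: str                                      # e.g. "city", "meal"
    synonyms: set = field(default_factory=set)     # surface forms users might type
    relations: dict = field(default_factory=dict)  # links to other concepts


# Hypothetical entity catalog, defined independently of any conversation.
CATALOG = [
    Entity("New York City", "city", {"nyc", "new york city", "new york"},
           {"country": "US", "state": "New York"}),
    Entity("dinner", "meal", {"dinner", "supper"}, {"time_of_day": "evening"}),
]


def find_entities(text: str) -> list[Entity]:
    """Return catalog entities whose synonyms appear in the text."""
    lowered = text.lower()
    found = []
    for entity in CATALOG:
        for syn in entity.synonyms:
            # Word-boundary match so a synonym doesn't match inside other words.
            if re.search(rf"\b{re.escape(syn)}\b", lowered):
                found.append(entity)
                break
    return found
```

Because relations live on the entity rather than in any one conversation, recognizing “supper” is enough to know the user means an evening meal.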

So Why Can’t The Chatbot Answer My Questions?

Good question, imaginary audience. It comes down to a problem on one of the chatbot’s two functional sides. If the chatbot doesn’t understand what you’re saying, that’s a language training issue on the chat side. There are other reasons the chatbot may fail to do what you ask, but those come from the bot side. The crux of language training issues is simple: language is hard.

When training is lacking, the chatbot may fail to answer your question because it doesn’t recognize the word or shorthand you’re using, doesn’t know what you mean when you use certain words together, or doesn’t understand why you responded to one of its questions the way you did.
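The first failure mode, unrecognized words or shorthand, is easy to demonstrate. This sketch assumes a hypothetical vocabulary (`VOCAB`) and shorthand map (`SHORTHAND`); it simply reports which words in an utterance the chatbot has no training for.

```python
import re

# Hypothetical vocabulary learned from training utterances.
VOCAB = {"reset", "my", "password", "i", "forgot", "the", "please"}

# Hypothetical shorthand map; empty until someone trains it.
SHORTHAND: dict[str, str] = {}


def unknown_words(text: str) -> list[str]:
    """List tokens the chatbot has never seen, after shorthand expansion."""
    tokens = re.findall(r"[a-z']+", text.lower())
    expanded = [SHORTHAND.get(tok, tok) for tok in tokens]
    return [tok for tok in expanded if tok not in VOCAB]
```

Here `unknown_words("pls reset my pw")` flags `"pls"` and `"pw"`; only after someone adds entries such as `{"pw": "password", "pls": "please"}` to `SHORTHAND` does the same request become fully recognizable.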

Language is complex, and building a system that accounts for every element of a sentence and its context takes far more steps than we might expect when breaking a sentence down. Training is what compensates for this complexity.

Every chatbot needs training. Most chatbot products today come with language models, intent processing, and entity identification, or at least the ability to plug into a cloud-based NLP or NLU service.[iii]

So if you’ve purchased a chatbot product and users are struggling to access services or complete requests that you know it supports, the problem lies in the language training. Even a “pretrained” model means little if its training isn’t relevant to what you’re doing.
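One way to check whether pretrained training actually fits your domain is to score the chatbot against a small set of labeled utterances from your own organization. In this Python sketch, `DOMAIN_TESTS` and `pretrained_classifier` are hypothetical stand-ins (the “pretrained” model knows only generic keywords); any function mapping text to an intent name could be passed to `accuracy` instead.

```python
from typing import Callable, Optional

# Hypothetical labeled utterances collected from your own domain.
DOMAIN_TESTS = [
    ("submit my expense report", "file_expense"),
    ("how many vacation days do i have", "check_pto"),
    ("reset my password", "reset_password"),
]


def pretrained_classifier(text: str) -> Optional[str]:
    """Stand-in for a generic 'pretrained' model: knows only generic keywords."""
    keywords = {"password": "reset_password", "weather": "get_weather"}
    for word, intent in keywords.items():
        if word in text.lower():
            return intent
    return None


def accuracy(classify: Callable[[str], Optional[str]],
             tests: list[tuple[str, str]]) -> float:
    """Fraction of domain utterances mapped to the expected intent."""
    correct = sum(1 for text, expected in tests if classify(text) == expected)
    return correct / len(tests)
```

Here `accuracy(pretrained_classifier, DOMAIN_TESTS)` comes out to 1/3: the generic model handles the password request but knows nothing about expenses or vacation days.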

Unfortunately, training has historically come from real user interactions. This is endlessly annoying for users, who are expected to use something that isn’t helpful yet on the promise that it will eventually be good.

Thankfully, a few ways around this problem exist today (we’ll go into detail in a forthcoming report on building a better chatbot in your organization), and vendors are increasingly addressing this weakness with vertical- or use-case-specific language capabilities. These will reduce the amount of enterprise-specific training a chatbot needs before it’s truly useful.

Chatbots will get better over time. Certain chatbot vendors already demonstrate exceptional capability, and the rest of the market is catching up. The next hurdle is making guided learning smoother so that unlucky developers aren’t spending days poring over literally thousands of conversations, but that’s a problem for another blog post.

[i] Technically, NLP and NLU aren’t themselves subfields of machine learning. However, chatbots typically pair NLP/NLU systems with machine-learning algorithms; in effect, machine learning is applied to NLP to enhance natural language processing.

[ii] For those of you with a background in data or object modeling, these “entities” roughly correspond to logical entities or classes.

[iii] You could build a chatbot from scratch without any of these, but such chatbots are increasingly rare in enterprise contexts outside of ChatOps.