The Cloud Is Disrupting Hadoop

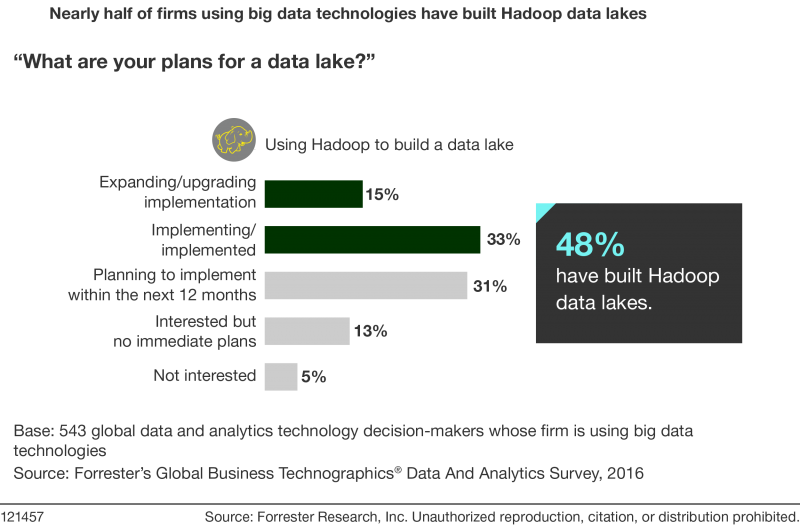

Forrester has seen unprecedented adoption of Hadoop over the last three years. We estimate that firms will spend $800 million on Hadoop software and related services in 2017. Not surprisingly, Hadoop vendors have capitalized on this: in that time, Cloudera, Hortonworks, and MapR have gone from “Who?” to household names.

But even the best runs end. The major force now exerting pressure on Hadoop is the cloud. In a recent report, The Cloudy Future Of Hadoop, Mike Gualtieri and I examine the impact the cloud is having on Hadoop. Here are a few highlights:

● Firms want to use more public cloud for big data, and Hadoop seems like a natural fit. We cover the reasons in the report, and on the surface the match seems made in heaven. Until you look deeper…

● Hadoop wasn’t designed for the cloud, so vendors are scrambling to make it relevant there. In the words of one insider, “Had we really understood cloud, we would not have designed Hadoop the way we did.” As a result, every Hadoop vendor has a strategy, and the strategies differ widely, for making Hadoop relevant in the cloud, where object stores and abstract “services” rule.

● Cloud vendors are hiding or replacing Hadoop altogether. AWS Athena lets you run SQL queries against big data stored in S3 without worrying about server instances. It’s part of a trend toward “serverless” offerings; Google Cloud Functions are another example. Databricks runs Spark directly against S3. IBM’s platform runs Spark against Cleversafe. See the pattern?

As more firms tire of Hadoop’s on-premises complexity and move to the public cloud, they will look to move their Hadoop stacks there too. As a result, Hadoop vendors will start to see their revenue shift from on-premises to the cloud.

But serverless offerings and other Hadoop alternatives in the public cloud will gain traction and undercut Hadoop revenue, unless the Hadoop community can give the industry a compelling reason to keep using Hadoop as a whole.

In our report, we cover several reasons firms may choose to make Hadoop the centerpiece of their big data strategy. The first, and most important, is Hadoop’s potential to unify security, metadata, governance, and management across Hadoop’s own components and its friends in the big data ecosystem. But the kicker is that security, metadata, governance, and management are exactly where the Hadoop vendors are trying to differentiate themselves. As a result, they are unable to join forces to preserve Hadoop’s place in an increasingly cloudy future, where Amazon, Google, IBM, and others offer alternatives. Because of this, we think that in two to three years Hadoop will be more of a brand than a coherent distribution or product. We already see Hortonworks, Cloudera, MapR, and Pivotal distancing themselves from the Hadoop vendor label because they see this trend coming.

As if that weren’t disruption enough, consider the impact that deep learning and AI will have on Hadoop. Just as Hadoop wasn’t designed for the cloud, it wasn’t designed for the matrix math that deep learning requires. And the cloud crew is busy creating specialized AI-friendly environments, which means Hadoop vendors have even more work to do to keep their software relevant. Will they make Hadoop a platform for AI? Probably not.

Don’t misread this. We think Hadoop has a future and will grow strongly for at least two to three more years, but ultimately it will take its place alongside data warehouses and mainframes. The pace of digital change in the age of the customer is simply too fast for any one technology to keep up for long.