No Testing, No Artificial Intelligence!

Don’t Put Your AI Initiatives At Risk: Test Your AI-Infused Applications!

In March 2018, an Uber self-driving car killed a person for the first time: It did not recognize a pedestrian crossing the road. COMPAS, a machine-learning-based software system assisting judges in 12 courts in the US, was found by ProPublica to have harmful bias: It discriminated between black and white people, suggesting to judges that the former were twice as likely as the latter to commit another crime and recommending longer pretrial detention periods for them. I could continue with more examples of how AI can become harmful.

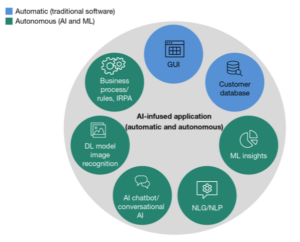

Enterprises are infusing their applications with AI technology and building new AI-based digital experiences to transform their business and accelerate their digital transformation programs. But all these positives about AI could come to an end, especially if we continue to see examples like these, in which AI that is untested or not properly tested is delivered to businesses and consumers. AI-infused applications are a mix of “automatic software” (the deterministic software we have all been building for years) and “autonomous software” (software that is nondeterministic and has learning capabilities). AI-infused apps see, listen, speak, sense, execute, automate, make decisions, and more.

The Future Of Apps Is Infused With AI

Why Is Testing AI-Infused Applications A Must?

- AI is being infused into strategic and increasingly important enterprise decision-making processes, either assisting execs or professionals in key decisions or making those decisions alone (across many business domains, from finance to healthcare).

- AI is also being used in systems responsible for human lives: self-driving cars, airplanes, cybersecurity systems, military systems, etc.

And as AI becomes more autonomous, the risk of these systems not being tested enough increases dramatically. As long as humans are in the loop, there is hope that bugs in these systems will be mitigated by humans making the right decision or taking the right action, but once humans are out of the loop, we are in the hands of this untested, potentially harmful software.

What Does It Mean To Test An AI-Infused Application (AIIA)?

Since AI-infused applications are a mix of automatic and autonomous software, testing an AIIA involves testing more than the sum of its parts, including all of their interactions. The good news is that testers, developers, and data scientists know how to test 80% of AIIAs and can use conventional testing tools, as well as testing services companies that are learning to do so. The bad news is that there are areas of AIIAs that we don’t know how to test. In my recent report, I call this “testing the unknown”; one example arises when a new experience is generated by the AI. To test an AI-generated experience, we can’t predefine a test case as we would for deterministic automatic software. Intrigued?
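To make the contrast concrete, here is a minimal Python sketch. It is an illustration only, not a method taken from the report: `price_with_tax`, `generate_greeting`, and `check_greeting_properties` are hypothetical names, and a random template picker stands in for a real AI component. The deterministic function can be tested against one predefined expected value; the nondeterministic one is instead checked against properties that any acceptable output should satisfy.

```python
import random

def price_with_tax(amount: float, rate: float = 0.2) -> float:
    """Deterministic ("automatic") code: the same input always gives the same output."""
    return round(amount * (1 + rate), 2)

# Hypothetical stand-in for an AI component whose output varies between calls.
TEMPLATES = [
    "Hello, {name}!",
    "Welcome back, {name}.",
    "Good to see you, {name}!",
]

def generate_greeting(name: str, rng: random.Random) -> str:
    """Nondeterministic ("autonomous") code: we cannot predict the exact string."""
    return rng.choice(TEMPLATES).format(name=name)

def check_greeting_properties(text: str, name: str) -> bool:
    """Properties assumed to hold for every acceptable greeting:
    it mentions the user, is non-empty and short, and ends with punctuation."""
    return name in text and 0 < len(text) <= 80 and text[-1] in ".!"

# Conventional test: one input, one predefined expected value.
assert price_with_tax(100.0) == 120.0

# Property-style test: sample many outputs and check invariants,
# because no single expected string can be written down in advance.
rng = random.Random()
samples = [generate_greeting("Ada", rng) for _ in range(200)]
assert all(check_greeting_properties(s, "Ada") for s in samples)
```

Property checks like these only narrow the problem. They say what an output must not violate, not whether a newly generated experience is actually good, which is where the “unknown” remains.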

Read my latest report on AI, “No Testing Means No Trust In AI: Part 1,” to find out more. And since I am in the process of working on the second part of this research, if you are among the 26% who claim to know how to test AI capabilities, please reach out to me at dlogiudice@forrester.com, as I’d really like to hear about it.